RTX 4090 vs H100: Performance and Cost Breakdown

Compare the RTX 4090 and H100 GPUs to determine the best fit for your AI workloads based on performance, cost, and scalability.

- AI

- GPUs

- Performance

RTX 4090 vs H100: Performance and Cost Breakdown

Looking for the best GPU for your AI workloads? The NVIDIA RTX 4090 and H100 are two standout options, but they serve very different purposes. Here's a quick overview:

- RTX 4090: A consumer-grade GPU with 24GB GDDR6X memory. Great for gaming, content creation, and small-to-medium AI tasks. Costs around $1,599 and offers excellent price-to-performance for researchers and smaller teams.

- H100: An enterprise-grade powerhouse with 80GB HBM3 memory and advanced AI features. Built for large-scale AI training and deployment. Priced at $25,000+, it’s ideal for data centers and high-end applications.

Quick Comparison

| Feature | RTX 4090 | H100 |

|---|---|---|

| Memory | 24GB GDDR6X | 80GB HBM3 |

| Memory Bandwidth | 1,008 GB/s | 3,350 GB/s |

| FP32 Performance | 83 TFLOPS | 67 TFLOPS |

| Tensor Performance | 165 TFLOPS | 1,979 TFLOPS |

| Power Consumption | 450W | 700W |

| Price | $1,599 | $25,000+ |

| Cloud Rate (Hourly) | $0.50–$1.20 | $4.00–$8.00 |

The RTX 4090 is perfect for budget-conscious users and smaller projects, while the H100 is the go-to for large-scale, enterprise-level AI tasks. Your choice depends on your workload size, budget, and scalability needs.

GPU Rental Demo on Hyperbolic | Rent GPUs in under 1 min! | H200, H100, RTX 4090, 3080 + 3070s

Hyperbolic

Hyperbolic

::: @iframe https://www.youtube.com/embed/qaoxnzWsY7E :::

NVIDIA RTX 4090: Specs and AI Performance

NVIDIA

NVIDIA

The RTX 4090 stands as NVIDIA's flagship consumer GPU, built on the advanced Ada Lovelace architecture. While it's primarily known for its gaming prowess, it has also found a place in AI research and prototyping, particularly among some U.S.-based research teams.

Technical Specs

At its core, the RTX 4090 is powered by the AD102 GPU, packing an impressive 16,384 CUDA cores capable of efficiently handling neural network computations. It comes with 24GB of GDDR6X memory on a 384-bit bus, offering a memory bandwidth of approximately 1,008 GB/s. This generous memory capacity is ideal for running moderately sized models and datasets with fewer interruptions for memory management.

The Ada Lovelace architecture brings in third-generation RT cores and fourth-generation Tensor cores, both optimized to improve AI inference tasks. The card operates with a total graphics power (TGP) of 450 watts and runs at a base clock of 2,230 MHz, which can boost up to 2,520 MHz depending on the workload. In terms of raw computational power, the RTX 4090 delivers around 165 teraFLOPS for FP16 operations and 83 teraFLOPS for FP32 computations, making it a strong contender for mixed precision training in modern AI frameworks.

AI Performance Benchmarks

When it comes to AI workloads, the RTX 4090 performs impressively. Benchmarks show that in PyTorch training scenarios, the card handles models like ResNet-50 with ease when using mixed precision. For natural language processing tasks, it can fine-tune models on datasets like GLUE efficiently. However, very large transformer models may require techniques like model sharding or gradient checkpointing to work within the card's memory constraints.

On the inference side, the RTX 4090 excels in optimized frameworks, delivering solid performance for language models and computer vision tasks. For example, it handles YOLO-based object detection tasks efficiently. That said, in workloads involving attention mechanisms, such as transformer models, memory bandwidth often becomes a bottleneck rather than compute power. Despite this, the card supports a wide range of AI applications effectively.

Common Use Cases

The RTX 4090 is versatile, making it a popular choice for various AI applications. In research and prototyping, its combination of high memory capacity and powerful compute performance allows data scientists to experiment with medium-scale models before transitioning to more enterprise-focused solutions. It’s particularly strong in fine-tuning pre-trained models, whether it’s adapting Stable Diffusion for creative projects or customizing language models for specific industries.

Computer vision tasks also benefit from the card’s capabilities. It handles training for custom object detection and image segmentation models efficiently, supporting typical batch sizes for convolutional neural networks. Content creators, too, have embraced the RTX 4090 for tasks like real-time AI-driven image generation, video upscaling, and even aspects of 3D rendering.

That said, the card does have its limitations. It’s less suited for large-scale distributed training since it lacks NVLink interconnects, which means multi-GPU setups depend on PCIe bandwidth. This can become a bottleneck in more extensive configurations. Additionally, while the RTX 4090 performs well for inference tasks, it lacks some of the reliability features and enterprise-level support required for production environments.

NVIDIA H100: Specs and AI Performance

The NVIDIA H100 is a powerhouse GPU built on the Hopper architecture, tailored for enterprise AI workloads and high-performance computing. It’s designed to handle large-scale machine learning tasks that go far beyond what consumer GPUs can manage.

Technical Specs

At the heart of the H100 is the GH100 GPU die, boasting 16,896 CUDA cores and 528 fourth-generation Tensor cores optimized for AI tasks. It pairs these with 80 GB of HBM3 memory, connected through a 5,120-bit memory interface, offering a staggering 3,350 GB/s of memory bandwidth.

The Hopper architecture introduces the Transformer Engine, which dynamically adjusts precision between FP16, BF16, and FP8 to boost training speeds while preserving accuracy. However, this performance comes at a cost - its 700-watt power consumption for the SXM5 version underscores its enterprise-level capabilities. In terms of raw computational power, the H100 delivers:

- 1,979 teraFLOPS for FP16 operations

- 989 teraFLOPS for FP32

- 67 teraFLOPS for FP64

Connectivity is another strong suit, with NVLink 4.0 providing 900 GB/s of inter-GPU bandwidth, enabling seamless communication between multiple GPUs. This makes it ideal for distributed training setups. The Multi-Instance GPU (MIG) feature allows the H100 to be split into up to seven independent GPU instances, each with its own compute and memory resources. These features make it a standout for both training and inference tasks.

AI Performance Benchmarks

The H100’s specs translate into exceptional performance across a variety of AI workloads. For large-scale language models, it’s built to handle training for models with hundreds of billions of parameters. Training times for massive transformer models are significantly reduced compared to previous-generation GPUs.

When it comes to inference, the H100 shines in serving large language models and recommendation systems. Its high memory capacity can store entire models, eliminating the need to split them across multiple GPUs. The ability to process multiple inference requests simultaneously makes it perfect for production environments.

The Hopper architecture also includes sparsity support, speeding up inference for models with structured sparse weights. Additionally, DPX instructions enhance performance for dynamic programming tasks, such as those found in genomics, quantum computing, and graph analytics, broadening its capabilities beyond traditional AI applications.

Common Use Cases

The H100 is a go-to choice for enterprise-level AI training and deployment. Tech giants rely on clusters of H100 GPUs to train foundational models, including large language models, computer vision systems, and multimodal AI. Its capabilities extend to high-performance computing tasks like weather simulations and scientific modeling.

Cloud providers also offer H100-powered instances for customers running demanding applications, from real-time recommendation engines to advanced natural language processing. However, its power requirements and enterprise-only availability make it suitable exclusively for data centers. The high cost and need for specialized cooling and infrastructure put it out of reach for individual researchers or smaller teams.

It’s worth noting that export restrictions limit the H100’s availability in certain regions. That, combined with its specialized requirements, sets it apart from consumer GPUs, firmly positioning it as a tool for large-scale enterprise deployments.

Cost Analysis and Cloud Pricing

When choosing between the RTX 4090 and H100, cost plays a significant role in shaping the decision. These GPUs cater to different audiences and use cases, which is reflected in their pricing structures. Understanding these financial aspects is key to determining which option aligns with your budget and project goals.

Purchase Costs vs. Cloud Rental Rates

The RTX 4090 is designed for the consumer market, making it a more affordable choice for individual researchers and smaller teams. However, its price can fluctuate due to market trends. On the other hand, the H100 is built for enterprise-level applications and comes with a much steeper price tag. Variants of the H100 with enhanced interconnect capabilities can push costs even higher, and deploying these GPUs often requires additional spending on high-performance servers and advanced cooling systems.

Cloud rental rates also differ significantly between the two. Instances using the RTX 4090 are generally more budget-friendly, while H100-powered instances command premium hourly rates. Flexible pricing options like spot instances can reduce costs, but availability may be unpredictable. These pricing differences are critical when planning your budget.

Budget Planning Impact

The RTX 4090 is a practical choice for those with modest budgets, even when factoring in the cost of supporting hardware. This makes it especially appealing to startups, academic researchers, and individual practitioners. In contrast, deploying the H100 involves a much larger financial commitment due to the need for enterprise-grade infrastructure, including specialized servers and networking equipment. Multi-GPU setups with the H100 can further escalate costs, positioning it firmly as an enterprise solution.

For teams with varying workloads, a hybrid approach can strike a balance. Many opt to use RTX 4090 systems for development and prototyping while reserving H100 cloud instances for intensive training tasks. This strategy helps manage fixed costs while still enabling access to high-performance resources when required. Cost-per-performance also depends on the workload: the RTX 4090 often provides excellent value for inference tasks and smaller models, whereas the H100 shines in large-scale training scenarios thanks to its superior memory capacity and bandwidth, despite its higher hourly rates. For short-term projects, renting cloud instances can be more economical, whereas long-term, continuous usage may justify the upfront investment in purchasing a GPU. The RTX 4090 typically reaches its break-even point faster than the H100, making it a more cost-effective choice for sustained use. Staying informed about pricing trends is essential to navigate these dynamics effectively.

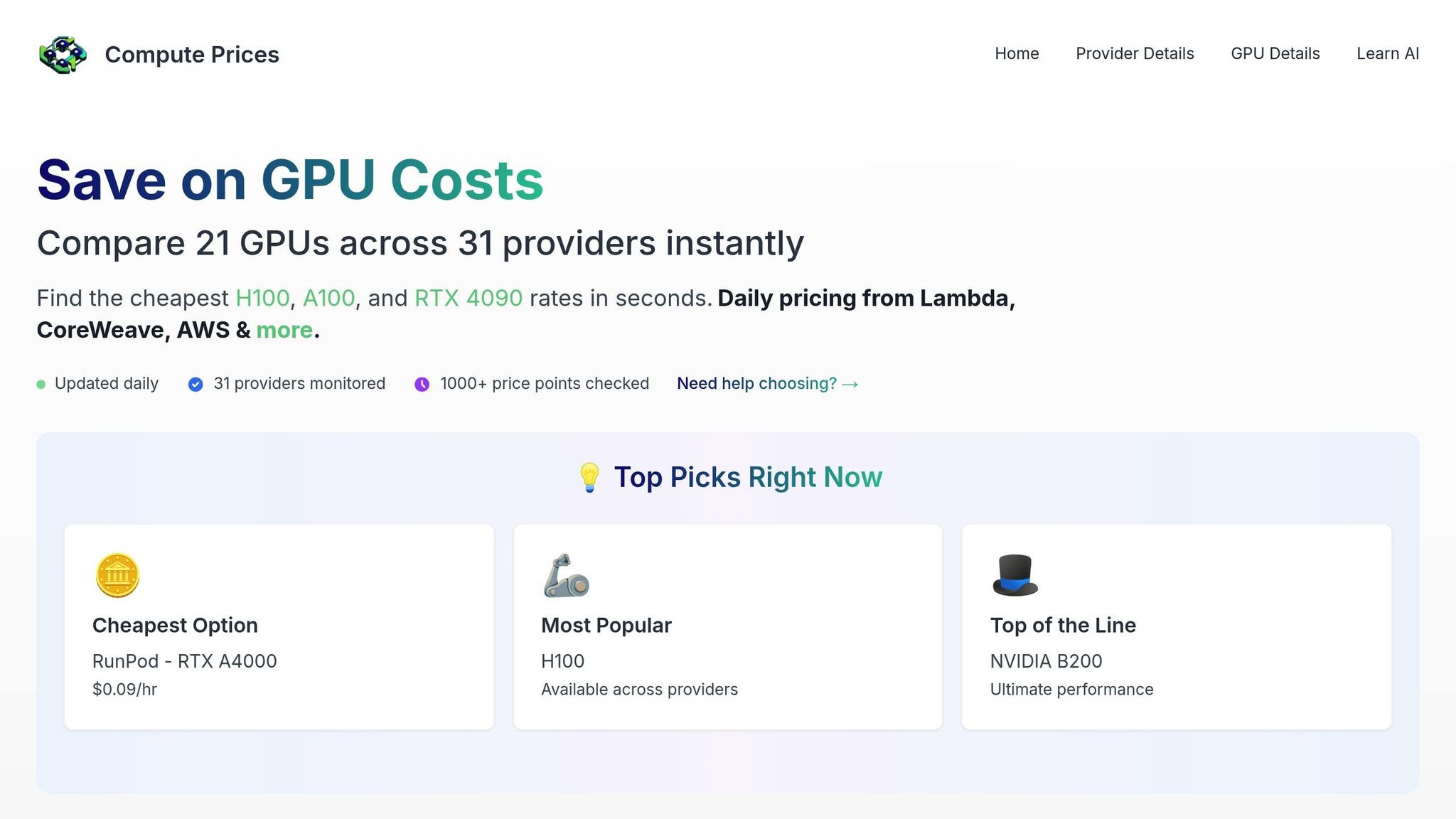

Using ComputePrices.com for Price Tracking

ComputePrices.com

ComputePrices.com

To stay on top of GPU costs, ComputePrices.com is a valuable tool. This platform tracks pricing across 31 cloud providers and monitors over 1,000 price points daily. It offers detailed data for both the RTX 4090 and H100, helping users pinpoint the most cost-efficient options for their needs.

With features like budget filtering and use-case-specific recommendations, ComputePrices.com provides real-time updates to reflect market changes and spot pricing opportunities. The platform also includes detailed provider specifications and performance benchmarks, offering a well-rounded view to support informed decisions about AI infrastructure investments.

sbb-itb-dd6066c

Performance and Cost Comparison Table

Table Metrics

When comparing the RTX 4090 and H100 GPUs, several key differences emerge in terms of performance and cost. The table below highlights the main specifications that are critical for AI workloads:

| Specification | RTX 4090 | H100 |

|---|---|---|

| Architecture | Ada Lovelace | Hopper |

| Manufacturing Process | 4nm TSMC | 4nm TSMC |

| CUDA Cores | 16,384 | 16,896 |

| Tensor Cores | 512 (4th Gen) | 456 (4th Gen) |

| Memory | 24GB GDDR6X | 80GB HBM3 |

| Memory Bandwidth | 1,008 GB/s | 3,350 GB/s |

| FP32 Performance | 83 TFLOPS | 67 TFLOPS |

| Tensor Performance (BF16) | 165 TFLOPS | 1,979 TFLOPS |

| Power Consumption | 450W | 700W |

| MSRP | $1,599 | $25,000+ |

| Typical Cloud Rate | $0.50–$1.20/hour | $4.00–$8.00/hour |

The memory and bandwidth differences between these GPUs clearly indicate their intended use cases. The RTX 4090's 24GB of memory is more than adequate for many standard AI tasks, while the H100's 80GB HBM3 memory is designed for handling much larger models without running into memory limitations. Additionally, the H100's memory bandwidth is over three times that of the RTX 4090, making it ideal for data-heavy workflows. However, the H100's 700W power consumption reflects its enterprise-grade focus, compared to the RTX 4090's more power-efficient 450W. These differences set the stage for a deeper look into their cost and performance trade-offs.

Cost vs. Performance Analysis

Taking a closer look at the specifications, the RTX 4090 emerges as a more cost-efficient option for FP32 workloads, delivering approximately 52 TFLOPS per $1,000 compared to just 2.7 TFLOPS for the H100. This makes the RTX 4090 a better choice for traditional compute-heavy tasks when budget is a concern.

For tensor operations, the RTX 4090 provides roughly 103 TFLOPS per $1,000, while the H100 delivers around 79 TFLOPS per $1,000. While the RTX 4090 offers better tensor performance per dollar, the H100's overall performance and larger memory capacity make it the go-to option for training much larger models.

When it comes to cloud rental costs, the differences are equally stark. Renting an RTX 4090 instance typically costs between $12 and $29 per day for continuous use, whereas an H100 instance ranges from $96 to $192 per day. For smaller-scale model inference tasks, the RTX 4090 provides significant savings, costing about one-sixth of what the H100 would.

For projects requiring under 200 compute hours, the RTX 4090 is the clear winner in terms of cost-effectiveness. However, for sustained workloads, the H100 becomes the better investment after about 50–75 hours of continuous use, thanks to its superior performance and ability to handle larger-scale models efficiently.

AI Workload Suitability and Optimization

Best GPU Choice for AI Tasks

Choosing between the RTX 4090 and H100 depends on your workload size, budget, and scalability needs. For small to medium-scale inference tasks, the RTX 4090 strikes a good balance between performance and cost. It’s particularly effective for moderate inference and computer vision workloads.

On the other hand, for large-scale models and high-throughput training, the H100 is built to handle demanding workloads. Its advanced architecture can streamline operations, often reducing the need for multiple GPUs. To get the most out of your AI infrastructure, tailor your hardware choice to your specific requirements.

AI Infrastructure Optimization Tips

Once you’ve selected your GPU, use these strategies to boost performance and manage costs effectively:

-

Assess your model size: Identify the maximum size your models will reach and account for additional memory needed for intermediate computations. The RTX 4090 is a great option for workloads with moderate memory needs. However, if your applications demand significant memory and computational power, the H100 is better suited for the job.

-

Optimize batch sizes: Adjust batch sizes to fully utilize GPU capacity and align tasks with your hardware’s strengths. This fine-tuning can greatly improve efficiency.

-

Adopt mixed precision training: Mixed precision training can enhance performance on both the RTX 4090 and H100, allowing you to process data faster without sacrificing accuracy.

-

Monitor GPU costs: Beyond upfront hardware expenses or rental fees, keep an eye on daily GPU pricing through tools like ComputePrices.com. These insights can help you identify cost-saving opportunities and determine the best times to rent or purchase GPUs.

For projects that exceed the capacity of a single GPU, compare the benefits of a multi-RTX 4090 setup to a single H100. While multiple GPUs can offer scalability, a single H100 often simplifies deployment and reduces management overhead.

Lastly, consider long-term ownership costs, such as power consumption and usage patterns, to avoid hidden expenses. By leveraging each GPU’s unique features, you can maximize your return on investment and ensure efficient operations.

Conclusion

Choosing the right GPU boils down to two main factors: the scale of your workload and your budget. The RTX 4090 is a solid pick for small to medium projects, offering reliable performance at a more approachable price. On the other hand, the H100 is built for enterprise-level AI tasks, boasting superior memory bandwidth, an advanced Transformer Engine, and an architecture tailored for large language models.

Cost is another critical piece of the puzzle. For many users, the RTX 4090’s lower price and reduced power consumption make it the practical choice. However, if your workloads require the H100’s advanced capabilities, the higher upfront cost can pay off with faster training times and better overall efficiency. Think about your current needs and where you’re headed - if you’re starting with smaller models but plan to scale up, it might be smarter to invest in high-performance hardware now rather than upgrading later. Alternatively, cloud rental services provide a flexible option for handling fluctuating compute demands.

Keeping an eye on pricing trends can also help you save. Websites like ComputePrices.com track thousands of price points across major providers daily, giving you the tools to time your purchases or rentals and potentially save a significant amount on your AI infrastructure.

Finally, remember that hardware is only part of the equation. Smart optimization techniques - like proper batch sizing, mixed precision training, and efficient memory management - can enhance performance on both GPUs. Combining the right hardware with these practices ensures you get the most out of your investment while achieving your AI goals more effectively.

FAQs

::: faq

How do the memory and bandwidth of the RTX 4090 and H100 compare, and what impact do these have on AI performance?

The RTX 4090 is equipped with 24GB of GDDR6 memory and delivers a bandwidth of 1,008 GB/s. On the other hand, the H100 boasts 80GB of HBM3 memory with a staggering bandwidth of about 3.35 TB/s. This massive gap in memory bandwidth gives the H100 a clear advantage when it comes to handling larger datasets and supporting higher data throughput.

For AI workloads - particularly those involving large-scale models or demanding training and inference tasks - the H100's superior bandwidth translates to faster data processing and fewer bottlenecks. While the RTX 4090 is a powerhouse for consumer applications, the H100 is purpose-built for enterprise-level AI tasks, making it the go-to choice for complex and large-scale AI systems. :::

::: faq

Which GPU is more cost-effective for long-term AI projects: the RTX 4090 or the H100, and when should you choose one over the other?

The RTX 4090 stands out as a solid option for small to medium-scale AI projects, balancing performance and cost effectively at approximately $1,600. It’s a great fit for teams or organizations working with limited budgets or tasks that don’t require the heavy-duty features of enterprise-grade hardware.

In contrast, the H100 - with a price range of $27,000 to $40,000 - is built for large-scale, enterprise-level AI workloads. Its advanced capabilities, including higher memory capacity and immense computational power, make it the go-to choice for managing massive AI models or expanding infrastructure to handle demanding tasks.

For long-term projects, the RTX 4090 often proves more economical unless your work involves the kind of high memory bandwidth and processing power that only the H100 can deliver - features critical for large-scale AI operations. :::

::: faq

Is the RTX 4090 powerful enough for large-scale AI models, or is the NVIDIA H100 a better choice?

The RTX 4090 delivers impressive performance for small to medium-scale AI projects. It's well-suited for tasks like training and inference, thanks to its strong processing capabilities and efficiency. However, when it comes to large-scale AI models, the NVIDIA H100 takes the lead. With its advanced Tensor Cores, larger memory capacity, and exceptional scalability, the H100 is designed to tackle more complex and demanding workloads.

The H100 also offers much faster training and inference speeds, making it a go-to option for building high-performance AI infrastructure. While the RTX 4090 works well for smaller-scale tasks, the H100 is the clear choice for large-scale applications that demand top-tier performance and flexibility. :::