How to Choose the Right GPU for Machine Learning

Learn how to select the ideal GPU for machine learning by balancing performance, memory, power efficiency, and budget considerations.

- AI

- GPUs

- Performance

How to Choose the Right GPU for Machine Learning

Choosing the right GPU for machine learning is all about balancing performance, memory, power efficiency, and cost. The wrong GPU can slow down your projects or waste money, especially in cloud-based setups where inefficiencies can drive up costs. Here's a quick breakdown of what to consider:

- Performance: Look at benchmarks like FLOPS, MLPerf scores, and tensor core capabilities. Enterprise GPUs like the NVIDIA A100 excel in large-scale tasks, while consumer GPUs like the RTX 4090 offer strong performance for smaller budgets.

- Memory: VRAM is critical for handling large datasets and batch sizes. Enterprise GPUs often come with high-bandwidth memory for better efficiency.

- Power Efficiency: High power draw increases cooling and operational costs. GPUs with better performance per watt save money in the long run.

- Cost: Consumer GPUs are cheaper upfront, but enterprise GPUs are built for scalability. For short-term needs, renting cloud GPUs can be more economical. Tools like ComputePrices.com help track GPU prices and find the best deals.

Quick Overview of Popular GPUs:

- NVIDIA A100: Best for large-scale training and inference; high cost.

- NVIDIA RTX 4090: Great for medium-scale tasks; balances power and price.

- AMD MI250: Ideal for memory-heavy tasks and distributed training.

Selecting the right GPU ensures faster training, efficient inference, and optimized costs. Tools like ComputePrices.com can help you compare prices and features to make the best decision for your needs.

2025 GPU Buying Guide For AI: Best Performance for Your Budget

::: @iframe https://www.youtube.com/embed/lxchTAWgpJ0 :::

Key Factors When Choosing GPUs for Machine Learning

Choosing the right GPU for machine learning involves weighing technical performance against cost. By evaluating performance benchmarks, memory capacity, power efficiency, and overall cost-effectiveness, you can align your GPU selection with both your workload needs and budget constraints. These considerations ensure the GPU you choose fits the demands of your specific machine learning tasks.

Performance Benchmarks

Performance benchmarks are critical when comparing GPUs for machine learning. Metrics like FP16/TF32 throughput, MLPerf scores, and FLOPS reveal how well a GPU can handle complex tasks. These benchmarks help you determine which models best suit your workload.

Modern GPUs are equipped with tensor cores designed to accelerate deep learning operations. While enterprise GPUs optimize these cores for maximum performance, consumer GPUs can still deliver strong results for many machine learning applications.

Memory bandwidth is another important factor, as it dictates how quickly data moves during training. Enterprise GPUs often include high-bandwidth memory, which is particularly useful for processing large datasets, offering a performance edge over the memory in many consumer-grade GPUs.

For a more direct comparison, MLPerf benchmarks measure GPU performance on tasks like image classification, object detection, and natural language processing, helping you identify the best fit for your specific use case.

Memory Capacity

The VRAM (video memory) capacity of a GPU is essential for training larger models or working with extensive datasets. The memory you need depends heavily on the batch sizes used during training. Larger batch sizes can speed up training and improve stability but require more memory, which may limit the complexity of models you can handle.

The type of memory also plays a role in performance. Enterprise GPUs often feature high-bandwidth memory designed for memory-intensive tasks, while many consumer GPUs rely on less specialized memory types, which may struggle under similar conditions. This difference impacts not only training efficiency but also ties into power consumption and cost considerations.

Power Efficiency and Operating Costs

The long-term costs of operating a GPU go beyond the initial purchase or rental price. Power consumption and heat generation can significantly affect operational expenses, especially in scenarios where GPUs run continuously or are deployed in data centers.

GPUs with higher power draw require more cooling, which can drive up costs. For heavy, ongoing workloads, selecting a GPU with better performance per watt can lead to meaningful energy savings over time, making power efficiency a key consideration.

Cost-Effectiveness

Balancing upfront costs with ongoing expenses is crucial when evaluating GPUs. Consumer GPUs are generally more affordable initially, making them a good option for smaller budgets. On the other hand, enterprise GPUs, while more expensive, are built for scalability and large-scale machine learning tasks, justifying their higher price for certain use cases.

For projects with irregular or short-term workloads, renting cloud-based GPUs can provide access to powerful hardware without requiring a large initial investment. However, for continuous or long-term operations, purchasing a GPU might offer better value in the long run.

Other factors, like utilization rates, depreciation, and resale value, also play a role in determining the total cost of ownership. By analyzing how performance scales with price, you can find the right balance between technical needs and budget limitations, ensuring your GPU choice supports both your machine learning goals and financial constraints.

Popular GPU Models for Machine Learning Compared

Choosing the right GPU for your machine learning projects can make a big difference in performance, scalability, and cost-effectiveness. Here’s a closer look at some of the top GPU models, detailing how they stack up for various machine learning tasks.

NVIDIA A100

NVIDIA A100

NVIDIA A100

The NVIDIA A100 is built for enterprise-level AI and high-performance computing. Designed with large-scale training and inference in mind, it offers advanced features like multi-instance partitioning, which lets you split the GPU into smaller, independent units to maximize efficiency. This makes it ideal for heavy-duty tasks like natural language processing, computer vision, and recommendation models. Pricing depends on the specific configuration and performance tier, but it’s geared toward organizations with significant computational needs.

NVIDIA RTX 4090

NVIDIA RTX 4090

NVIDIA RTX 4090

The RTX 4090 combines high-end consumer graphics with the ability to handle professional AI workloads. Thanks to its advanced architecture, it works seamlessly with popular machine learning frameworks, making it a strong choice for medium-scale training and inference. It strikes a good balance between performance and affordability, making it a great option for smaller research teams or individual developers who need a powerful yet reasonably priced GPU.

AMD MI250

AMD MI250

AMD MI250

AMD’s MI250 features a dual-GPU setup that enhances parallel processing and expands memory capacity. This design is particularly useful for memory-heavy tasks and distributed training. Paired with AMD’s ROCm software stack, the MI250 integrates well with major machine learning frameworks, offering a viable alternative for organizations that require scalable, multi-GPU setups for demanding AI applications.

sbb-itb-dd6066c

Matching GPUs to Your Machine Learning Tasks

Choosing the right GPU for your machine learning tasks is all about balancing performance and cost. Whether you're training large models, running inference, or working on prototypes, the hardware you pick can make or break your workflow. By aligning your GPU selection with your specific needs, you can avoid overspending on unnecessary power or dealing with frustrating performance bottlenecks.

Training Large Models

Training large models is a demanding process that calls for GPUs with substantial computing power and plenty of memory. Tasks like training transformer architectures or deep convolutional networks with high-resolution images require hardware capable of handling massive datasets and intensive calculations.

The NVIDIA A100 is a standout choice here. Its high-bandwidth memory reduces data bottlenecks, and NVLink technology enables seamless multi-GPU setups, which are essential for scaling up training on larger models. While this hardware is top-tier, it comes with a higher price tag - something to consider if you're managing costs.

For medium-scale projects, the RTX 4090 offers a more affordable yet powerful alternative. It provides enough memory and compute power for many training tasks, making it a favorite among academic labs and smaller AI startups. This card strikes a good balance between capability and cost, especially for those not working with the largest models.

Inference and Fine-Tuning Tasks

When it comes to inference and fine-tuning pre-trained models, priorities shift toward consistent performance, energy efficiency, and cost-effectiveness.

The RTX 4090 shines in inference scenarios. Its advanced tensor cores speed up common operations, and its memory capacity allows multiple model variants to run simultaneously, making it a solid option for production environments.

Fine-tuning tasks, such as tailoring models like BERT or ResNet to specific datasets, typically involve smaller batch sizes and shorter training cycles. Here, GPUs that balance memory and compute power can significantly reduce turnaround times without breaking the bank.

For continuous deployments, power efficiency becomes a critical factor. GPUs with lower energy consumption can save on operating costs over time, especially for models running 24/7. Meanwhile, development and testing environments often require a different set of priorities.

Development and Testing

Prototyping and algorithm development benefit from GPUs that offer strong performance without the steep price of enterprise-grade hardware. Mid-range options like the RTX 4070 or RTX 4080 are perfect for proof-of-concept projects and smaller experiments. They provide a good mix of capability and affordability, making them ideal for researchers and developers working on early-stage ideas.

Development workflows often involve frequent model iterations and hyperparameter tuning. Quick training and testing cycles are essential, and GPUs in this category deliver the speed needed to keep up with rapid experimentation. Memory demands are also lower during this phase since prototype models and research datasets typically fit within the capacities of these GPUs. This allows developers to focus on refining algorithms without worrying about complex memory management.

Selecting the right GPU for your workload ensures you get the performance you need without overspending. Starting with more modest hardware for initial development and scaling up as your needs grow is a smart way to optimize both resources and results.

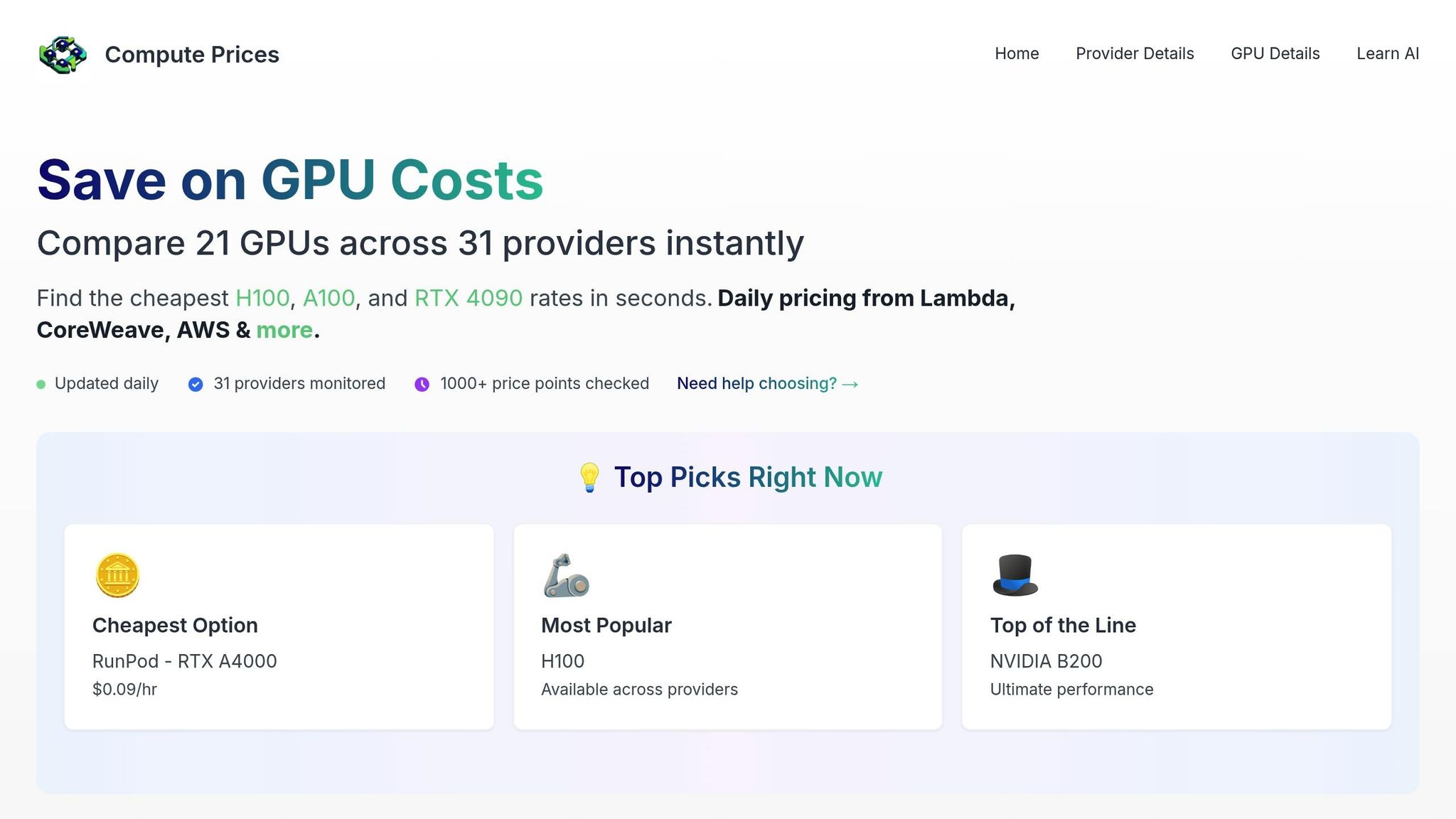

Finding the Best GPU Prices with ComputePrices.com

ComputePrices.com

ComputePrices.com

After choosing the right GPU for your machine learning tasks, the next hurdle is finding it at a price that fits your budget. Balancing cost with performance is critical, especially when GPU prices can vary significantly. That’s where ComputePrices.com steps in - offering price tracking and comparison tools tailored for AI and machine learning professionals.

This platform makes it easier to find cost-effective GPU options without the hassle of manual tracking.

Daily GPU Price Tracking

ComputePrices.com keeps tabs on 21 GPU models across 31 providers, monitoring over 1,000 price points daily[1]. This includes popular GPUs like the NVIDIA H100, A100, and RTX 4090, as well as offerings from providers such as Lambda, CoreWeave, and AWS.

Frequent price updates are essential because GPU costs in the cloud market can shift quickly. What’s affordable today might not be tomorrow. Access to real-time pricing data allows you to time your rentals strategically, saving money on larger projects.

The platform doesn’t just track prices - it also uncovers patterns that might not be immediately obvious. For instance, some providers may offer better deals for long-term commitments, while others shine with competitive spot pricing for short-term needs. This kind of insight helps you make smarter decisions based on your project’s timeline and budget.

Real-time data also powers advanced filtering tools that match GPUs to your exact requirements.

Advanced Filtering Options

ComputePrices.com’s filtering system goes beyond basic price comparisons. It allows you to refine your search based on memory capacity, performance benchmarks, and efficiency metrics - ensuring the GPUs you consider are capable of handling your workload.

For those working with tight budgets, the platform’s filters can narrow down options to only those that fit within your cost limits. You can also filter by use case, making it easy to find GPUs optimized for training or inference.

One standout feature is the VRAM filter, which is especially important for machine learning tasks. Many modern models require at least 24GB of VRAM, and the platform can help you locate GPUs that meet this requirement without overspending.

You can even compare performance across different GPU architectures. For example, evaluating the NVIDIA A100’s Ampere architecture against the older V100’s Volta architecture can help you decide if upgrading to newer technology will provide better value for your specific needs.

These refined search capabilities ensure you’re not just choosing a GPU that works but one that aligns perfectly with both your performance needs and budget.

Cost Reduction for AI Workloads

ComputePrices.com is designed to help you cut GPU costs while still accessing high-performance hardware for training and inference. Its comparison tools often highlight price differences between providers for the same hardware, revealing opportunities to save.

For budget-conscious teams, the platform’s price-to-performance analysis can be a game changer. For instance, the RTX 4090 is a strong choice for individuals and small teams, offering excellent value. Meanwhile, larger teams might find the A100 or H100 more cost-effective over time, despite their higher initial costs.

If your team requires GPUs for continuous workloads, the platform can identify providers with competitive rates for 24/7 use. This is especially critical for inference tasks, where small differences in hourly rates can add up significantly.

ComputePrices.com also sheds light on spot instance pricing, which is ideal for training jobs that can handle interruptions. Many cloud providers offer discounts for these workloads, giving you another way to save.

Conclusion: Making the Right GPU Choice

When it comes to selecting a GPU for machine learning, it all boils down to balancing compute performance, memory capacity, power efficiency, and cost. These elements collectively determine how well your GPU will meet the demands of your workload.

Compute performance, typically measured in FLOPs, and memory capacity are at the heart of a GPU's ability to handle deep learning tasks. More Tensor and CUDA cores mean faster processing, but that's only part of the story. Sufficient VRAM and bandwidth are equally critical for managing large models and datasets effectively [2][4][5]. Matching these specifications to your workload is key to optimizing performance.

Power efficiency is another important consideration. A GPU's TDP (Thermal Design Power) not only influences cooling requirements but also impacts long-term operational costs [2][3][5]. For those scaling operations, this factor can play a significant role in staying within budget.

Finally, cost-effectiveness ensures you're getting the most out of your investment. Pairing a high-end GPU like the H100 with small-scale tasks or using a T4 for massive training jobs is a mismatch that wastes resources and reduces efficiency [2][5].

This is where ComputePrices.com comes in. By quantifying these factors, the platform helps you identify the best GPU for your needs while ensuring you get the most competitive pricing. With tools for real-time pricing and filtering, along with daily updates on over 1,000 price points across 31 providers, you can make informed decisions and avoid overspending on hardware.

The right GPU strikes the perfect balance between performance and budget, ensuring you get exactly what you need without paying for what you don’t.

FAQs

::: faq

What are the main differences between the NVIDIA RTX 4090 and A100 GPUs for machine learning?

The NVIDIA A100 is a powerhouse GPU tailored for large-scale machine learning and AI workloads in enterprise settings. With up to 80GB of memory and advanced Tensor Cores, it's built to handle high-performance training, inference, and multi-tasking in data centers. Its design prioritizes efficiency and reliability, with power consumption ranging from 250 to 300 watts - making it a suitable choice for demanding enterprise environments.

On the other hand, the NVIDIA RTX 4090 is a consumer-grade GPU primarily designed for gaming and creative tasks, but it also packs serious AI capabilities. Equipped with Tensor Cores and CUDA Cores, it’s a solid option for smaller-scale AI projects or individual developers. With a price tag of about $1,800 and a power consumption of roughly 450 watts, it delivers excellent performance for personal use or budget-friendly setups.

In short, the A100 is geared toward large-scale, enterprise-level AI workloads, while the RTX 4090 offers a more affordable solution for smaller or personal machine learning needs. :::

::: faq

How do I choose a GPU that balances performance and cost for my machine learning project?

To strike the right balance between GPU performance and cost for your machine learning project, it's crucial to evaluate the computational needs of your tasks. If you're tackling demanding workloads like training deep learning models, you'll likely need high-performance GPUs such as the NVIDIA A100 or RTX 4090. On the other hand, for less intensive tasks like inference or smaller-scale projects, more affordable options - like older NVIDIA models or AMD GPUs - can get the job done.

Keep your budget in mind while considering performance requirements. If cutting-edge hardware isn't essential, choosing a GPU with lower power consumption and adequate memory can save you money without sacrificing efficiency. You can also cut costs by reducing idle GPU time and scaling resources to match your actual usage. By aligning your GPU choice with your workload and budget, you can achieve a solid performance-to-cost balance. :::

::: faq

Why is VRAM important for machine learning, and how much do I need for my projects?

VRAM is a key factor in machine learning, as it determines the amount of data and the number of model parameters your GPU can handle at any given time. This impacts training speed, the complexity of your models, and the size of data batches you can process. If your GPU doesn't have enough VRAM, you might run into out-of-memory errors or experience slower performance - especially when working with large-scale models like GPT-3.

For smaller or moderately sized machine learning projects, 8GB of VRAM is usually sufficient. However, if you're dealing with more complex models or larger datasets, you might need 16GB or more to ensure smooth performance. When selecting a GPU, think about the size of your models and datasets to strike the right balance between memory capacity and budget. :::