Top 7 Cheapest Cloud GPU Providers for AI Training

Explore affordable cloud GPU providers that offer competitive pricing and performance for AI training tasks, ideal for developers and businesses.

Finding an affordable cloud GPU provider can be challenging, especially with the growing demand for AI training. Here's a quick rundown of 7 budget-friendly options that balance cost and performance for tasks like training neural networks and processing large datasets:

- RunPod: Offers serverless and dedicated GPU instances with flexible pay-as-you-go pricing. Data centers are in the U.S. and Europe.

- Vast.ai: A peer-to-peer GPU marketplace with dynamic pricing and a bidding system for competitive rates.

- TensorDock: Straightforward hourly and monthly pricing with no setup fees. Data centers in the U.S. and Europe.

- Genesis Cloud: Pay-as-you-go pricing with discounts for long-term use. Focuses on NVIDIA GPUs and European compliance.

- Lambda Labs: Per-second billing with pre-configured instances for quick deployment. Supports NVIDIA A100, V100, and RTX series GPUs.

- Oracle Cloud (OCI): Enterprise-grade GPU solutions with flexible pricing plans and global data center coverage.

- Paperspace: Pay-per-second billing with intuitive tools for cost tracking. Features NVIDIA RTX and A100 GPUs.

Quick Comparison

| Provider | Pricing Model | GPU Options | Data Centers | Billing Features |
|---|---|---|---|---|
| RunPod | Pay-as-you-go | Various NVIDIA GPUs | U.S., Europe | Real-time usage tracking |
| Vast.ai | Dynamic (bidding) | Peer-to-peer GPUs | Global (varies) | Prepaid credit system |
| TensorDock | Hourly/Monthly | Tiered NVIDIA GPUs | U.S., Europe | Minute-based billing |
| Genesis | Pay-as-you-go | NVIDIA GPUs | U.S., Europe | Credit-based with discounts |
| Lambda | Per-second billing | A100, V100, RTX GPUs | U.S. | Usage analytics, pre-funded |
| Oracle OCI | Flexible plans | Multi-GPU setups | Global | Cost management tools |
| Paperspace | Pay-per-second | RTX, A100, V100 GPUs | U.S., Europe | Real-time cost tracking |

Each provider has unique strengths, from flexible billing to specialized GPU configurations. Choose based on your budget, workload scale, and location needs.

1. RunPod

RunPod provides cloud-based GPU solutions designed to support AI training while keeping costs manageable. Whether you're looking for serverless GPU instances or dedicated pods, RunPod offers options that fit a variety of project demands. Here’s a closer look at what it brings to the table.

Pricing (Hourly/Monthly)

RunPod uses a credit-based, pay-as-you-go system. This flexible approach allows customers to choose configurations that balance performance needs with their budget.

GPU Models and Specs

RunPod offers a range of GPU options, from budget-friendly models to high-performance setups. Each GPU is paired with CPU and memory sized to match it, so the rest of the instance does not hold the GPU back.

Data Center Locations

RunPod’s data centers are located in the U.S. and Europe, which keeps latency low and access to compute reliable for users in these regions.

Billing Options

RunPod provides real-time usage tracking, making it easy to monitor costs. Payments can be made using major credit cards or online payment services, ensuring convenience for customers.

2. Vast.ai

Vast.ai operates as a peer-to-peer GPU marketplace, connecting those in need of computing power with providers who have idle GPUs. This setup encourages competitive pricing, making it a budget-friendly choice for AI developers and businesses aiming to reduce training costs. Let’s break down how Vast.ai works, from pricing to GPU options.

Pricing

Vast.ai uses a dynamic pricing model that shifts based on supply and demand. Users can also set a maximum price through a bidding system, ensuring access to competitive rates that align with their budget.
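The maximum-price idea can be sketched as a simple filter over marketplace listings. This is only an illustration: the `Offer` class, the `cheapest_within_budget` helper, and the sample listings and prices are all invented here, and nothing in it calls or mirrors Vast.ai's actual API.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Offer:
    """A hypothetical marketplace listing (not a real Vast.ai object)."""
    host: str
    gpu: str
    price_per_hour: float  # USD per hour


def cheapest_within_budget(offers, gpu, max_price) -> Optional[Offer]:
    """Return the cheapest offer for `gpu` priced at or below `max_price`."""
    matches = [o for o in offers if o.gpu == gpu and o.price_per_hour <= max_price]
    # min() with default=None handles the "nothing fits the budget" case.
    return min(matches, key=lambda o: o.price_per_hour, default=None)


# Invented sample listings, for illustration only.
SAMPLE_OFFERS = [
    Offer("host-a", "A100", 1.10),
    Offer("host-b", "A100", 0.95),
    Offer("host-c", "V100", 0.40),
]

best = cheapest_within_budget(SAMPLE_OFFERS, "A100", max_price=1.00)
print(best)
```

If no listing fits under the cap, the helper returns `None`, which is the signal to either raise the maximum price or wait for supply to change.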

GPU Options and Specifications

With a network of hardware providers, Vast.ai offers a variety of GPU options tailored to different AI training needs. Each listing comes with detailed specs, helping users choose the right balance of performance and cost for their projects.

Billing System

Vast.ai uses a prepaid credit system, charging only for the time GPUs are actively in use. This straightforward approach helps users manage their resources efficiently without overspending.

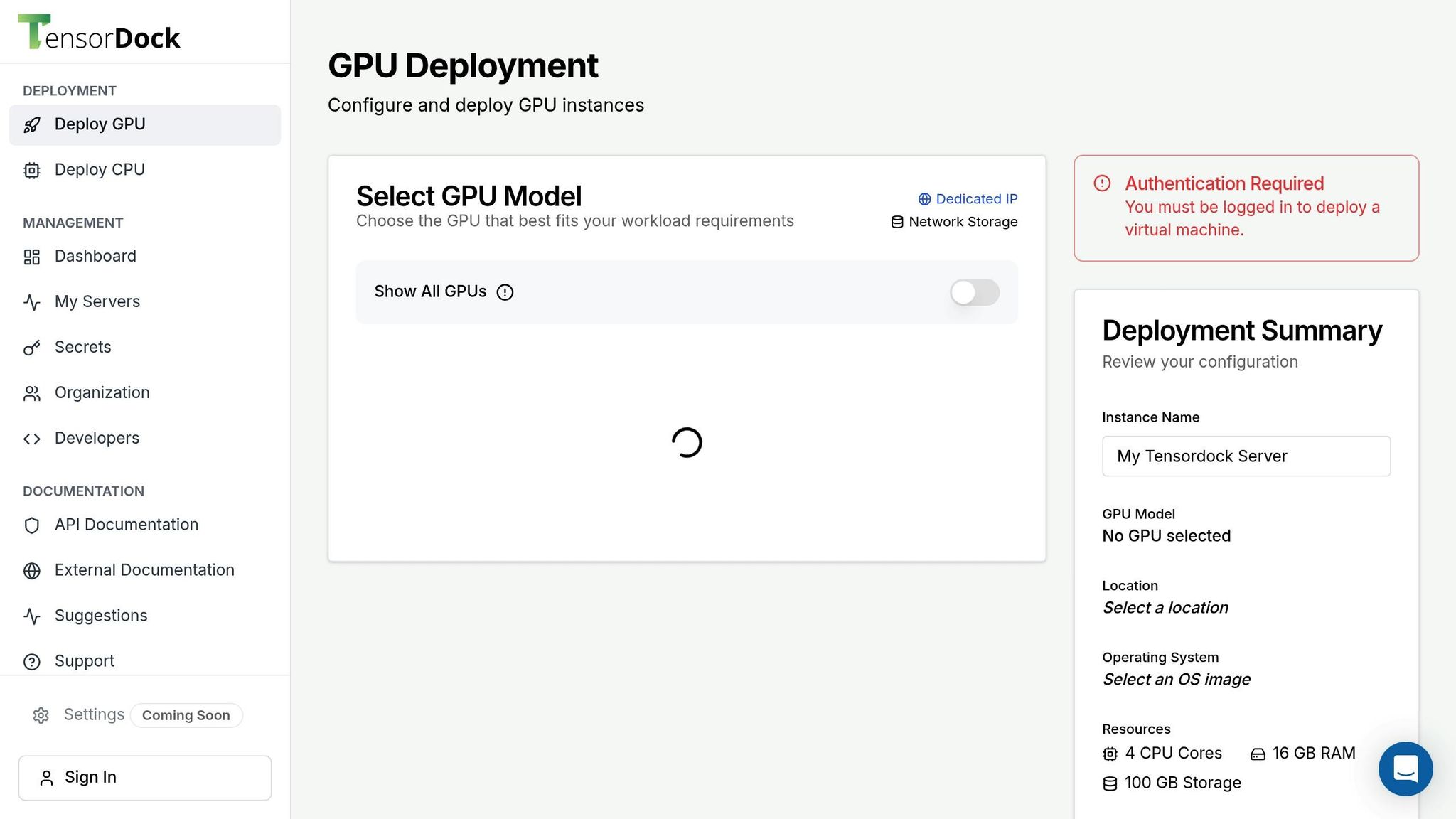

3. TensorDock

TensorDock is a cloud GPU provider that stands out for its straightforward pricing and quick deployment. It's particularly tailored to developers and small teams working on AI projects.

Pricing (Hourly/Monthly)

TensorDock keeps things simple with flat hourly rates and monthly plans for longer training sessions. There are no setup fees or minimum usage requirements, making it a flexible choice for projects of all sizes.
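Whether the hourly rate or a monthly plan is cheaper comes down to a break-even calculation. The sketch below shows the arithmetic; the rates used are placeholders chosen for easy math, not TensorDock prices, and the function names are hypothetical.

```python
def break_even_hours(hourly_rate, monthly_price):
    """Hours per month at which hourly billing costs the same as the monthly plan."""
    if hourly_rate <= 0:
        raise ValueError("hourly_rate must be positive")
    return monthly_price / hourly_rate


def cheaper_plan(hours_needed, hourly_rate, monthly_price):
    """Return 'monthly' if the flat plan costs less than paying by the hour."""
    hourly_total = hours_needed * hourly_rate
    return "monthly" if monthly_price < hourly_total else "hourly"


# Placeholder numbers: $0.50/hour versus a $250 monthly plan.
print(break_even_hours(0.50, 250.0))   # 500.0 hours
print(cheaper_plan(600, 0.50, 250.0))  # monthly
print(cheaper_plan(200, 0.50, 250.0))  # hourly
```

Below the break-even point, hourly billing wins; above it, the monthly plan does.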

GPU Models and Specs

The platform offers a variety of GPU options, each paired with dedicated CPU and RAM configurations that scale according to the GPU tier. To handle demanding AI workloads, TensorDock also includes fast NVMe SSD storage.

Data Center Locations

TensorDock operates data centers on the U.S. East Coast, the U.S. West Coast, and in Europe. Users can pick the location that gives them the lowest latency, although availability may depend on demand.

Billing Options

Billing is handled through a prepaid credit system, with payments accepted via credit cards, PayPal, or cryptocurrency. Charges are calculated per minute, starting from instance launch and ending at termination. Tools like real-time usage monitoring, spending alerts, and automatic termination help users stay within budget and avoid unexpected costs.
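Per-minute billing from launch to termination can be sketched as follows. Two things here are assumptions rather than documented TensorDock behavior: that a partial minute is rounded up to a full one, and the $0.60/hour rate, which is a placeholder.

```python
import math
from datetime import datetime


def per_minute_charge(launched_at, terminated_at, hourly_rate):
    """Charge for an instance billed per minute between launch and termination."""
    seconds = (terminated_at - launched_at).total_seconds()
    if seconds < 0:
        raise ValueError("terminated_at must not be before launched_at")
    # Assumption: any started minute is billed as a whole minute.
    billed_minutes = math.ceil(seconds / 60)
    return round(billed_minutes * hourly_rate / 60, 2)


launch = datetime(2025, 1, 1, 9, 0, 0)
stop = datetime(2025, 1, 1, 10, 30, 20)

# 90 minutes 20 seconds is billed as 91 minutes at the placeholder rate.
print(per_minute_charge(launch, stop, hourly_rate=0.60))  # 0.91
```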

4. Genesis Cloud

Genesis Cloud is a GPU provider tailored for developers, offering AI training solutions that strike a balance between performance and affordability. It's a great option for teams working with tighter budgets but still needing reliable hardware.

Pricing (Hourly/Monthly)

Genesis Cloud uses a straightforward pay-as-you-go model. Charges start the moment an instance is launched, and costs are calculated based on compute time, storage, and data transfer. For those who commit to longer usage, discounts are available, making it even more budget-friendly. This flexible pricing pairs well with their top-tier hardware options.

GPU Models and Specs

The platform features NVIDIA GPUs suited to machine learning tasks. These GPUs are paired with dedicated CPUs, fast memory, and high-speed storage to minimize bottlenecks. Drivers come pre-installed, so popular frameworks like PyTorch, TensorFlow, and JAX work without extra setup, and instances are connected by high-bandwidth networking.

Data Center Locations

Genesis Cloud hosts its data centers in North America and Europe. This setup helps reduce latency and ensures compliance with local data protection regulations, which is crucial for distributed training workflows.

Billing Options

Genesis Cloud operates on a credit-based billing system where users pre-fund their accounts. To help manage expenses, instances can automatically shut down when a budget limit is reached or when idle time is detected. For enterprise customers, monthly invoicing is also available, offering additional convenience for larger teams.
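The automatic-shutdown rule described above amounts to two checks: spend against a budget, and idle time against a limit. A minimal sketch of that logic, assuming hypothetical thresholds and a hypothetical `shutdown_reason` helper (this is not Genesis Cloud's API):

```python
from typing import Optional


def shutdown_reason(spent, budget, idle_minutes, max_idle_minutes) -> Optional[str]:
    """Return why an instance should stop, or None if it can keep running."""
    if spent >= budget:
        return "budget reached"
    if idle_minutes >= max_idle_minutes:
        return "idle too long"
    return None


# Hypothetical thresholds: a $100 budget and a 30-minute idle limit.
print(shutdown_reason(spent=100.0, budget=100.0, idle_minutes=0, max_idle_minutes=30))
print(shutdown_reason(spent=12.5, budget=100.0, idle_minutes=45, max_idle_minutes=30))
print(shutdown_reason(spent=12.5, budget=100.0, idle_minutes=5, max_idle_minutes=30))
```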

5. Lambda Labs

Lambda Labs delivers cloud GPU services tailored for machine learning tasks, offering a developer-friendly experience with straightforward setup and competitive pricing. It's a solid choice for scaling AI training efficiently.

Pricing (Hourly/Monthly)

Lambda Labs uses a per-second billing model, meaning you only pay for the exact compute time you need. This approach can be especially cost-effective for shorter training sessions or experimental projects. They offer both on-demand pricing and reserved instances, with the latter providing up to 50% savings for longer-term commitments.

Their GPU instances are priced competitively, and there are no data transfer fees between instances in the same region. This can significantly reduce costs for distributed training setups that rely on frequent communication between nodes.
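To see why per-second billing helps short runs, compare it with billing that rounds up to whole hours. The sketch below uses a placeholder rate of $1.20/hour, which is not a Lambda Labs price. The 50% discount is the upper bound quoted above, so actual reserved savings may be smaller.

```python
import math


def per_second_cost(seconds, hourly_rate):
    """Cost when every second is billed at the pro-rated hourly rate."""
    return seconds * hourly_rate / 3600


def hourly_rounded_cost(seconds, hourly_rate):
    """Cost when usage is rounded up to whole hours (shown for comparison)."""
    return math.ceil(seconds / 3600) * hourly_rate


def reserved_rate(hourly_rate, discount):
    """Hourly rate after a fractional discount, e.g. 0.50 for 50% off."""
    return hourly_rate * (1 - discount)


run_seconds = 20 * 60  # a 20-minute experiment
rate = 1.20            # placeholder USD per hour

print(round(per_second_cost(run_seconds, rate), 2))      # 0.4
print(round(hourly_rounded_cost(run_seconds, rate), 2))  # 1.2
print(round(reserved_rate(rate, 0.50), 2))               # 0.6
```

For a 20-minute run, per-second billing costs a third of what whole-hour billing would at the same rate.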

GPU Models and Specs

The platform supports NVIDIA A100, V100, and RTX series GPUs, all configured for machine learning workloads. A100 instances are available with either 40 GB or 80 GB of GPU memory, making them well suited to training large language models and complex neural networks. Each instance includes high-performance NVMe SSD storage and fast networking to minimize bottlenecks during data-intensive tasks.

To save time, instances come pre-configured with popular ML frameworks like PyTorch, TensorFlow, and JAX. CUDA drivers and libraries are also pre-installed and optimized, letting you skip the tedious setup process and start training models within minutes.

Data Center Locations

Lambda Labs runs its data centers primarily in the United States, strategically located to ensure low-latency access to major metropolitan areas. These facilities are specifically built for machine learning workloads, equipped with high-bandwidth networking and redundant power systems for reliable performance during extended training sessions.

Billing Options

Payments are flexible and simple. Lambda Labs accepts major credit cards and provides enterprise billing options for organizations that prefer monthly invoicing. Pre-funded account credits are available and don't expire, giving teams the freedom to manage budgets effectively. Detailed usage analytics are included, helping you track spending across projects and team members with ease.

6. Oracle Cloud (OCI)

Oracle Cloud Infrastructure (OCI) delivers dependable GPU solutions tailored for AI training, with a clear emphasis on meeting enterprise demands. While Oracle's reputation is firmly rooted in databases and software solutions, its cloud GPU offerings stand out for handling demanding AI workloads without driving up expenses.

Pricing (Hourly/Monthly)

Oracle Cloud provides flexible pricing options, including pay-as-you-go and committed use plans. These options are designed to help businesses manage costs effectively, especially for long-term workloads. For the latest details, check Oracle's pricing page.

GPU Models and Specs

Oracle Cloud supports a variety of GPU configurations, including setups with multiple GPUs. These are tuned for popular machine learning frameworks, making them well-suited for data-intensive AI training tasks.

Data Center Locations

With a global network of data centers, including several in the U.S., Oracle Cloud reduces latency and ensures compliance with regional data regulations. This extensive reach supports a wide range of enterprise needs.

Billing Options

Oracle Cloud offers multiple billing methods, from credit card payments to enterprise-level billing solutions. Its built-in cost management tools make it easier to monitor resource usage and control spending. This structured billing approach aligns with the cost-conscious strategies explored earlier in this guide.

7. Paperspace

Paperspace is a cloud GPU provider that prioritizes ease of use and accessibility, making it a great choice for developers and small teams working on AI training projects. It eliminates the need for complex enterprise setups, offering a straightforward solution for GPU computing.

Pricing (Hourly/Monthly)

Paperspace keeps costs manageable with its pay-per-second billing, ensuring you’re only charged for the time you actively use the resources. GPU instance pricing ranges from $0.45 to $2.30 per hour, depending on the GPU model and configuration.

For those looking for subscription options, Gradient plans start at $8.00/month for basic use, while professional tiers are available at $39.00/month.
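The hourly range quoted above translates into a monthly budget range once you know how many GPU hours you expect to use. A small sketch using only the $0.45 and $2.30 figures from this section; the 100-hour workload is an assumed example, and subscription fees are left out because how they combine with instance charges is not stated here.

```python
LOW_RATE = 0.45   # USD per hour, low end of the range quoted above
HIGH_RATE = 2.30  # USD per hour, high end of the range quoted above


def monthly_cost_range(gpu_hours):
    """Return (low, high) instance cost in USD for a number of GPU hours."""
    return (round(gpu_hours * LOW_RATE, 2), round(gpu_hours * HIGH_RATE, 2))


# Assumed workload: 100 GPU hours in a month.
low, high = monthly_cost_range(100)
print(f"${low:.2f} to ${high:.2f}")  # $45.00 to $230.00
```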

GPU Models and Specs

Paperspace offers a variety of GPU models designed for different AI workloads. Their lineup includes the NVIDIA RTX A4000, RTX A5000, and RTX A6000, as well as V100 and A100 instances for more intensive tasks.

- RTX A4000: Equipped with 16 GB of VRAM, ideal for smaller models and experimentation.

- A100 instances: Feature 40 GB of high-bandwidth memory, delivering top-tier performance for deep learning and transformer models.

Each instance is paired with optimized CPU and memory configurations to ensure seamless performance.
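Picking between these instances often starts with a memory check. The sketch below uses only the two VRAM figures listed above (16 GB and 40 GB) and returns the smallest GPU that covers a given requirement. The `smallest_gpu_that_fits` helper and the example requirements are hypothetical.

```python
# VRAM in GB, taken from the two instance types listed above.
GPU_VRAM_GB = {"RTX A4000": 16, "A100": 40}


def smallest_gpu_that_fits(required_gb):
    """Return the name of the smallest listed GPU with enough VRAM, or None."""
    candidates = [(vram, name) for name, vram in GPU_VRAM_GB.items() if vram >= required_gb]
    return min(candidates)[1] if candidates else None


print(smallest_gpu_that_fits(12))  # RTX A4000
print(smallest_gpu_that_fits(24))  # A100
print(smallest_gpu_that_fits(64))  # None
```

A `None` result means the job needs a larger card than the two listed here or has to be split across several GPUs.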

Data Center Locations

Paperspace operates data centers in New York, California, and Amsterdam, providing excellent coverage across North America and Europe. The U.S. facilities are specifically tuned for AI workloads, with high-speed networking and storage systems that reduce data transfer delays, keeping GPU utilization high during training.

Billing Options

Paperspace supports major credit cards and offers real-time cost tracking, so you can monitor expenses as you go. This transparency helps prevent unexpected charges.

For teams and organizations, the platform provides consolidated billing with detailed usage reports. Additional features like spending alerts and automatic instance shutdowns make it easier to manage budgets and control costs effectively.

Provider Comparison: Pros and Cons

Choosing the right provider for your AI training workload can be challenging, as each one brings different strengths and pricing structures to the table. For the most up-to-date information, visit ComputePrices.com. Below is a breakdown of the key advantages and potential drawbacks of each provider, along with notable features to keep in mind.

RunPod stands out for its affordable pricing and variety of GPU options. It offers flexibility through serverless and dedicated pod models, making it suitable for a range of workloads.

Vast.ai uses a peer-to-peer marketplace model, allowing users to benefit from competitive pricing via a bidding system. However, because the hardware comes from independent providers, service reliability may be less consistent than with traditional cloud providers.

TensorDock is known for its cost-effective solutions and developer-friendly tools, such as API support for scalable workflows. If you have specific regional needs, it's worth checking the availability of their services in your area.

Genesis Cloud caters to organizations with strict regulatory requirements, focusing on European compliance and data sovereignty. However, its focus on Europe might pose challenges for businesses needing global scalability.

Lambda Labs is a favorite among researchers, offering instances that come pre-configured for machine learning. Features like per-second billing and pre-installed frameworks help reduce costs and speed up deployment.

Oracle Cloud (OCI) provides enterprise-grade infrastructure with extensive support, making it a strong choice for businesses seeking high performance and integration with established cloud ecosystems.

Paperspace is designed with ease of use in mind, making it a great option for teams new to cloud GPU computing. Its intuitive interface and real-time cost tracking make managing budgets much simpler.

Additional Considerations

Providers offer various billing models, including pay-as-you-go and committed-use plans, with standard tools for cost transparency. Data center locations vary, which can impact both performance and compliance with regulations. Additionally, GPU performance consistency may differ between enterprise-centric solutions and marketplace-based models.

This overview is designed to help you weigh costs against capabilities, ensuring your AI training workloads run smoothly. For more detailed and current insights, visit ComputePrices.com.

Final Recommendations

Choose a cloud GPU provider that aligns with your unique requirements, budget, and technical goals. ComputePrices.com offers daily price comparisons for 21 GPUs across 31 providers, making it easier to identify affordable options.

When evaluating providers, think about factors like the scale of your workload, performance expectations, and billing flexibility. Leverage ComputePrices.com's filtering tools to narrow down providers that best suit your project needs. Keep an eye on pricing trends to take advantage of new cost-saving opportunities.

Get started with your comparison today at ComputePrices.com.

FAQs

::: faq

What are the differences in pricing models among cloud GPU providers, and which option is the most budget-friendly for long-term AI training?

Cloud GPU providers offer several pricing models, including on-demand hourly rates, per-second billing, and discounted reserved plans. For workloads that are short-term or sporadic, on-demand or per-second billing can be a flexible and cost-effective choice. On the other hand, if you're tackling long-term AI training projects, reserved or monthly pricing often delivers better overall savings.

Some platforms are particularly appealing for their affordability in sustained use. For instance, you can find GPUs on certain services starting at around $0.50 per hour, making them a solid option for extended tasks. Additionally, providers with marketplace-style models let users customize their setups, enabling cost-effective solutions tailored to specific needs. These pricing options are especially helpful for developers and businesses aiming to stretch their AI training budgets while maintaining strong performance. :::

::: faq

What should you consider about data center locations when choosing a cloud GPU provider for AI training?

When picking a cloud GPU provider, the location of their data centers can make a big difference in latency and overall performance. Simply put, the closer the data center is to your users or where your AI model is deployed, the less delay you'll experience. This is particularly critical for tasks like real-time AI processing, where even slight delays can disrupt performance.

On top of that, some providers offer high-speed networking such as 400GbE or InfiniBand, which can significantly boost data transfer speeds within the data center itself. Opting for a provider with well-placed data centers and strong infrastructure can help your AI workloads run more efficiently and respond faster. :::

::: faq

How do different GPU options and configurations impact the speed and efficiency of AI training?

The GPU you choose and how you configure it can make a huge difference in the speed and efficiency of AI training. High-performance GPUs like NVIDIA's H100 or A100 come equipped with advanced Tensor Cores and high memory bandwidth. These features allow them to process large datasets and complex models much faster, cutting down training time and boosting efficiency.

For even greater performance, running multiple GPUs connected through interconnects like NVLink or PCIe can speed up data exchange between GPUs, which is crucial for large-scale AI workloads. Another way to increase speed, often with little loss of accuracy, is to use lower-precision formats such as FP16 or FP8. Choosing the right GPUs and fine-tuning their setup to match your specific AI tasks can lead to better results and smarter use of resources. :::
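One concrete effect of lower precision is the memory needed just to hold a model's weights. The sketch below is a back-of-the-envelope estimate for a hypothetical 7-billion-parameter model. It counts weights only and ignores gradients, optimizer state, and activations, all of which add substantially to real training memory.

```python
# Bytes used to store one parameter in each format.
BYTES_PER_PARAM = {"FP32": 4, "FP16": 2, "FP8": 1}


def weights_gb(num_params, precision):
    """Approximate memory in GB (10^9 bytes) needed to store the weights alone."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


params = 7_000_000_000  # hypothetical 7B-parameter model

for precision in ("FP32", "FP16", "FP8"):
    print(precision, weights_gb(params, precision), "GB")
```

Halving the precision halves the weight footprint, which can decide whether a model fits on a smaller, cheaper GPU.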